使用 KerasHub 进行语义分割

作者: Sachin Prasad, Divyashree Sreepathihalli, Ian Stenbit

创建日期 2024/10/11

最后修改日期 2024/10/22

描述: 使用 KerasHub 进行 DeepLabV3 训练和推理。

背景

语义分割是一种计算机视觉任务,它为图像中的每个像素分配一个类别标签,例如“人”、“自行车”或“背景”,从而有效地将图像划分为对应于不同对象类别或类别的区域。

KerasHub 提供 DeepLabv3、DeepLabv3+、SegFormer 等模型用于语义分割。

本指南演示如何使用 KerasHub 的 Google 开发的用于图像语义分割的 DeepLabv3+ 模型进行微调和使用。其架构结合了空洞卷积、上下文信息聚合和强大的骨干网络,以实现准确而详细的语义分割。

DeepLabv3+ 通过添加一个简单而有效的解码器模块来改进分割结果,尤其是在对象边界处,从而扩展了 DeepLabv3。这两种模型在各种图像分割基准测试上都取得了最先进的结果。

参考文献

具有空洞可分离卷积的编码器-解码器用于语义图像分割 重新思考用于语义图像分割的空洞卷积

设置和导入

让我们安装依赖项并导入必要的模块。

要运行本教程,您需要安装以下软件包

keras-hubkeras

!pip install -q --upgrade keras-hub

!pip install -q --upgrade keras

安装 keras 和 keras-hub 后,为 keras 设置后端。本指南可以使用任何后端(Tensorflow、JAX、PyTorch)运行。

import os

os.environ["KERAS_BACKEND"] = "jax"

import keras

from keras import ops

import keras_hub

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

使用预训练的 DeepLabv3+ 模型执行语义分割

KerasHub 语义分割 API 的最高级别 API 是 keras_hub.models API。此 API 包括完全预训练的语义分割模型,例如 keras_hub.models.DeepLabV3ImageSegmenter。

让我们开始构建一个在 Pascal VOC 数据集上预训练的 DeepLabv3 模型。此外,定义模型的预处理函数来预处理图像和标签。注意: 默认情况下,KerasHub 中的 from_preset() 方法会加载具有所有类别(此例中为 21 个类别)的预训练任务权重。

model = keras_hub.models.DeepLabV3ImageSegmenter.from_preset(

"deeplab_v3_plus_resnet50_pascalvoc"

)

image_converter = keras_hub.layers.DeepLabV3ImageConverter(

image_size=(512, 512),

interpolation="bilinear",

)

preprocessor = keras_hub.models.DeepLabV3ImageSegmenterPreprocessor(image_converter)

让我们可视化此预训练模型的结果

filepath = keras.utils.get_file(

origin="https://storage.googleapis.com/keras-cv/models/paligemma/cow_beach_1.png"

)

image = keras.utils.load_img(filepath)

image = np.array(image)

image = preprocessor(image)

image = keras.ops.expand_dims(image, axis=0)

preds = ops.expand_dims(ops.argmax(model.predict(image), axis=-1), axis=-1)

def plot_segmentation(original_image, predicted_mask):

plt.figure(figsize=(5, 5))

plt.subplot(1, 2, 1)

plt.imshow(original_image[0] / 255)

plt.axis("off")

plt.subplot(1, 2, 2)

plt.imshow(predicted_mask[0])

plt.axis("off")

plt.tight_layout()

plt.show()

plot_segmentation(image, preds)

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 5s/步

1/1 ━━━━━━━━━━━━━━━━━━━━ 5s 5s/步

训练自定义语义分割模型

在本指南中,我们将组装一个完整的 KerasHub DeepLabV3 语义分割模型的训练流程。这包括数据加载、增强、训练、指标评估和推理!

下载数据

我们下载 Pascal VOC 2012 数据集,并提供额外的注释 通过逆检测器进行语义轮廓,并将其分割为训练数据集 train_ds 和评估数据集 eval_ds。

# @title helper functions

import logging

import multiprocessing

from builtins import open

import os.path

import random

import xml

import tensorflow_datasets as tfds

VOC_URL = "https://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar"

SBD_URL = "https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/semantic_contours/benchmark.tgz"

# Note that this list doesn't contain the background class. In the

# classification use case, the label is 0 based (aeroplane -> 0), whereas in

# segmentation use case, the 0 is reserved for background, so aeroplane maps to

# 1.

CLASSES = [

"aeroplane",

"bicycle",

"bird",

"boat",

"bottle",

"bus",

"car",

"cat",

"chair",

"cow",

"diningtable",

"dog",

"horse",

"motorbike",

"person",

"pottedplant",

"sheep",

"sofa",

"train",

"tvmonitor",

]

# This is used to map between string class to index.

CLASS_TO_INDEX = {name: index for index, name in enumerate(CLASSES)}

# For the mask data in the PNG file, the encoded raw pixel value need to be

# converted to the proper class index. In the following map, [0, 0, 0] will be

# convert to 0, and [128, 0, 0] will be converted to 1, so on so forth. Also

# note that the mask class is 1 base since class 0 is reserved for the

# background. The [128, 0, 0] (class 1) is mapped to `aeroplane`.

VOC_PNG_COLOR_VALUE = [

[0, 0, 0],

[128, 0, 0],

[0, 128, 0],

[128, 128, 0],

[0, 0, 128],

[128, 0, 128],

[0, 128, 128],

[128, 128, 128],

[64, 0, 0],

[192, 0, 0],

[64, 128, 0],

[192, 128, 0],

[64, 0, 128],

[192, 0, 128],

[64, 128, 128],

[192, 128, 128],

[0, 64, 0],

[128, 64, 0],

[0, 192, 0],

[128, 192, 0],

[0, 64, 128],

]

# Will be populated by maybe_populate_voc_color_mapping() below.

VOC_PNG_COLOR_MAPPING = None

def maybe_populate_voc_color_mapping():

"""Lazy creation of VOC_PNG_COLOR_MAPPING, which could take 64M memory."""

global VOC_PNG_COLOR_MAPPING

if VOC_PNG_COLOR_MAPPING is None:

VOC_PNG_COLOR_MAPPING = [0] * (256**3)

for i, colormap in enumerate(VOC_PNG_COLOR_VALUE):

VOC_PNG_COLOR_MAPPING[

(colormap[0] * 256 + colormap[1]) * 256 + colormap[2]

] = i

# There is a special mapping with [224, 224, 192] -> 255

VOC_PNG_COLOR_MAPPING[224 * 256 * 256 + 224 * 256 + 192] = 255

VOC_PNG_COLOR_MAPPING = tf.constant(VOC_PNG_COLOR_MAPPING)

return VOC_PNG_COLOR_MAPPING

def parse_annotation_data(annotation_file_path):

"""Parse the annotation XML file for the image.

The annotation contains the metadata, as well as the object bounding box

information.

"""

with open(annotation_file_path, "r") as f:

root = xml.etree.ElementTree.parse(f).getroot()

size = root.find("size")

width = int(size.find("width").text)

height = int(size.find("height").text)

objects = []

for obj in root.findall("object"):

# Get object's label name.

label = CLASS_TO_INDEX[obj.find("name").text.lower()]

# Get objects' pose name.

pose = obj.find("pose").text.lower()

is_truncated = obj.find("truncated").text == "1"

is_difficult = obj.find("difficult").text == "1"

bndbox = obj.find("bndbox")

xmax = int(bndbox.find("xmax").text)

xmin = int(bndbox.find("xmin").text)

ymax = int(bndbox.find("ymax").text)

ymin = int(bndbox.find("ymin").text)

objects.append(

{

"label": label,

"pose": pose,

"bbox": [ymin, xmin, ymax, xmax],

"is_truncated": is_truncated,

"is_difficult": is_difficult,

}

)

return {"width": width, "height": height, "objects": objects}

def get_image_ids(data_dir, split):

"""To get image ids from the "train", "eval" or "trainval" files of VOC data."""

data_file_mapping = {

"train": "train.txt",

"eval": "val.txt",

"trainval": "trainval.txt",

}

with open(

os.path.join(data_dir, "ImageSets", "Segmentation", data_file_mapping[split]),

"r",

) as f:

image_ids = f.read().splitlines()

logging.info(f"Received {len(image_ids)} images for {split} dataset.")

return image_ids

def get_sbd_image_ids(data_dir, split):

"""To get image ids from the "sbd_train", "sbd_eval" from files of SBD data."""

data_file_mapping = {"sbd_train": "train.txt", "sbd_eval": "val.txt"}

with open(

os.path.join(data_dir, data_file_mapping[split]),

"r",

) as f:

image_ids = f.read().splitlines()

logging.info(f"Received {len(image_ids)} images for {split} dataset.")

return image_ids

def parse_single_image(image_file_path):

"""Creates metadata of VOC images and path."""

data_dir, image_file_name = os.path.split(image_file_path)

data_dir = os.path.normpath(os.path.join(data_dir, os.path.pardir))

image_id, _ = os.path.splitext(image_file_name)

class_segmentation_file_path = os.path.join(

data_dir, "SegmentationClass", image_id + ".png"

)

object_segmentation_file_path = os.path.join(

data_dir, "SegmentationObject", image_id + ".png"

)

annotation_file_path = os.path.join(data_dir, "Annotations", image_id + ".xml")

image_annotations = parse_annotation_data(annotation_file_path)

result = {

"image/filename": image_id + ".jpg",

"image/file_path": image_file_path,

"segmentation/class/file_path": class_segmentation_file_path,

"segmentation/object/file_path": object_segmentation_file_path,

}

result.update(image_annotations)

# Labels field should be same as the 'object.label'

labels = list(set([o["label"] for o in result["objects"]]))

result["labels"] = sorted(labels)

return result

def parse_single_sbd_image(image_file_path):

"""Creates metadata of SBD images and path."""

data_dir, image_file_name = os.path.split(image_file_path)

data_dir = os.path.normpath(os.path.join(data_dir, os.path.pardir))

image_id, _ = os.path.splitext(image_file_name)

class_segmentation_file_path = os.path.join(data_dir, "cls", image_id + ".mat")

object_segmentation_file_path = os.path.join(data_dir, "inst", image_id + ".mat")

result = {

"image/filename": image_id + ".jpg",

"image/file_path": image_file_path,

"segmentation/class/file_path": class_segmentation_file_path,

"segmentation/object/file_path": object_segmentation_file_path,

}

return result

def build_metadata(data_dir, image_ids):

"""Transpose the metadata which convert from list of dict to dict of list."""

# Parallel process all the images.

image_file_paths = [

os.path.join(data_dir, "JPEGImages", i + ".jpg") for i in image_ids

]

pool_size = 10 if len(image_ids) > 10 else len(image_ids)

with multiprocessing.Pool(pool_size) as p:

metadata = p.map(parse_single_image, image_file_paths)

keys = [

"image/filename",

"image/file_path",

"segmentation/class/file_path",

"segmentation/object/file_path",

"labels",

"width",

"height",

]

result = {}

for key in keys:

values = [value[key] for value in metadata]

result[key] = values

# The ragged objects need some special handling

for key in ["label", "pose", "bbox", "is_truncated", "is_difficult"]:

values = []

objects = [value["objects"] for value in metadata]

for object in objects:

values.append([o[key] for o in object])

result["objects/" + key] = values

return result

def build_sbd_metadata(data_dir, image_ids):

"""Transpose the metadata which convert from list of dict to dict of list."""

# Parallel process all the images.

image_file_paths = [os.path.join(data_dir, "img", i + ".jpg") for i in image_ids]

pool_size = 10 if len(image_ids) > 10 else len(image_ids)

with multiprocessing.Pool(pool_size) as p:

metadata = p.map(parse_single_sbd_image, image_file_paths)

keys = [

"image/filename",

"image/file_path",

"segmentation/class/file_path",

"segmentation/object/file_path",

]

result = {}

for key in keys:

values = [value[key] for value in metadata]

result[key] = values

return result

def decode_png_mask(mask):

"""Decode the raw PNG image and convert it to 2D tensor with probably

class."""

# Cast the mask to int32 since the original uint8 will overflow when

# multiplied with 256

mask = tf.cast(mask, tf.int32)

mask = mask[:, :, 0] * 256 * 256 + mask[:, :, 1] * 256 + mask[:, :, 2]

mask = tf.expand_dims(tf.gather(VOC_PNG_COLOR_MAPPING, mask), -1)

mask = tf.cast(mask, tf.uint8)

return mask

def load_images(example):

"""Loads VOC images for segmentation task from the provided paths"""

image_file_path = example.pop("image/file_path")

segmentation_class_file_path = example.pop("segmentation/class/file_path")

segmentation_object_file_path = example.pop("segmentation/object/file_path")

image = tf.io.read_file(image_file_path)

image = tf.image.decode_jpeg(image)

segmentation_class_mask = tf.io.read_file(segmentation_class_file_path)

segmentation_class_mask = tf.image.decode_png(segmentation_class_mask)

segmentation_class_mask = decode_png_mask(segmentation_class_mask)

segmentation_object_mask = tf.io.read_file(segmentation_object_file_path)

segmentation_object_mask = tf.image.decode_png(segmentation_object_mask)

segmentation_object_mask = decode_png_mask(segmentation_object_mask)

example.update(

{

"image": image,

"class_segmentation": segmentation_class_mask,

"object_segmentation": segmentation_object_mask,

}

)

return example

def load_sbd_images(image_file_path, seg_cls_file_path, seg_obj_file_path):

"""Loads SBD images for segmentation task from the provided paths"""

image = tf.io.read_file(image_file_path)

image = tf.image.decode_jpeg(image)

segmentation_class_mask = tfds.core.lazy_imports.scipy.io.loadmat(seg_cls_file_path)

segmentation_class_mask = segmentation_class_mask["GTcls"]["Segmentation"][0][0]

segmentation_class_mask = segmentation_class_mask[..., np.newaxis]

segmentation_object_mask = tfds.core.lazy_imports.scipy.io.loadmat(

seg_obj_file_path

)

segmentation_object_mask = segmentation_object_mask["GTinst"]["Segmentation"][0][0]

segmentation_object_mask = segmentation_object_mask[..., np.newaxis]

return {

"image": image,

"class_segmentation": segmentation_class_mask,

"object_segmentation": segmentation_object_mask,

}

def build_dataset_from_metadata(metadata):

"""Builds TensorFlow dataset from the image metadata of VOC dataset."""

# The objects need some manual conversion to ragged tensor.

metadata["labels"] = tf.ragged.constant(metadata["labels"])

metadata["objects/label"] = tf.ragged.constant(metadata["objects/label"])

metadata["objects/pose"] = tf.ragged.constant(metadata["objects/pose"])

metadata["objects/is_truncated"] = tf.ragged.constant(

metadata["objects/is_truncated"]

)

metadata["objects/is_difficult"] = tf.ragged.constant(

metadata["objects/is_difficult"]

)

metadata["objects/bbox"] = tf.ragged.constant(

metadata["objects/bbox"], ragged_rank=1

)

dataset = tf.data.Dataset.from_tensor_slices(metadata)

dataset = dataset.map(load_images, num_parallel_calls=tf.data.AUTOTUNE)

return dataset

def build_sbd_dataset_from_metadata(metadata):

"""Builds TensorFlow dataset from the image metadata of SBD dataset."""

img_filepath = metadata["image/file_path"]

cls_filepath = metadata["segmentation/class/file_path"]

obj_filepath = metadata["segmentation/object/file_path"]

def md_gen():

c = list(zip(img_filepath, cls_filepath, obj_filepath))

# random shuffling for each generator boosts up the quality.

random.shuffle(c)

for fp in c:

img_fp, cls_fp, obj_fp = fp

yield load_sbd_images(img_fp, cls_fp, obj_fp)

dataset = tf.data.Dataset.from_generator(

md_gen,

output_signature=(

{

"image": tf.TensorSpec(shape=(None, None, 3), dtype=tf.uint8),

"class_segmentation": tf.TensorSpec(

shape=(None, None, 1), dtype=tf.uint8

),

"object_segmentation": tf.TensorSpec(

shape=(None, None, 1), dtype=tf.uint8

),

}

),

)

return dataset

def load(

split="sbd_train",

data_dir=None,

):

"""Load the Pacal VOC 2012 dataset.

This function will download the data tar file from remote if needed, and

untar to the local `data_dir`, and build dataset from it.

It supports both VOC2012 and Semantic Boundaries Dataset (SBD).

The returned segmentation masks will be int ranging from [0, num_classes),

as well as 255 which is the boundary mask.

Args:

split: string, can be 'train', 'eval', 'trainval', 'sbd_train', or

'sbd_eval'. 'sbd_train' represents the training dataset for SBD

dataset, while 'train' represents the training dataset for VOC2012

dataset. Defaults to `sbd_train`.

data_dir: string, local directory path for the loaded data. This will be

used to download the data file, and unzip. It will be used as a

cache directory. Defaults to None, and `~/.keras/pascal_voc_2012`

will be used.

"""

supported_split_value = [

"train",

"eval",

"trainval",

"sbd_train",

"sbd_eval",

]

if split not in supported_split_value:

raise ValueError(

f"The support value for `split` are {supported_split_value}. "

f"Got: {split}"

)

if data_dir is not None:

data_dir = os.path.expanduser(data_dir)

if "sbd" in split:

return load_sbd(split, data_dir)

else:

return load_voc(split, data_dir)

def load_voc(

split="train",

data_dir=None,

):

"""This function will download VOC data from a URL. If the data is already

present in the cache directory, it will load the data from that directory

instead.

"""

extracted_dir = os.path.join("VOCdevkit", "VOC2012")

get_data = keras.utils.get_file(

fname=os.path.basename(VOC_URL),

origin=VOC_URL,

cache_dir=data_dir,

extract=True,

)

data_dir = os.path.join(os.path.dirname(get_data), extracted_dir)

image_ids = get_image_ids(data_dir, split)

# len(metadata) = #samples, metadata[i] is a dict.

metadata = build_metadata(data_dir, image_ids)

maybe_populate_voc_color_mapping()

dataset = build_dataset_from_metadata(metadata)

return dataset

def load_sbd(

split="sbd_train",

data_dir=None,

):

"""This function will download SBD data from a URL. If the data is already

present in the cache directory, it will load the data from that directory

instead.

"""

extracted_dir = os.path.join("benchmark_RELEASE", "dataset")

get_data = keras.utils.get_file(

fname=os.path.basename(SBD_URL),

origin=SBD_URL,

cache_dir=data_dir,

extract=True,

)

data_dir = os.path.join(os.path.dirname(get_data), extracted_dir)

image_ids = get_sbd_image_ids(data_dir, split)

# len(metadata) = #samples, metadata[i] is a dict.

metadata = build_sbd_metadata(data_dir, image_ids)

dataset = build_sbd_dataset_from_metadata(metadata)

return dataset

加载数据集

为了训练和评估,让我们使用“sbd_train”和“sbd_eval”。您也可以为 load 函数选择以下任何数据集:'train'、'eval'、'trainval'、'sbd_train' 或 'sbd_eval'。'sbd_train' 表示 SBD 数据集的训练数据集,而 'train' 表示 VOC2012 数据集的训练数据集。

train_ds = load(split="sbd_train", data_dir="segmentation")

eval_ds = load(split="sbd_eval", data_dir="segmentation")

预处理数据

preprocess_inputs 实用函数预处理输入,将其转换为包含图像和 segmentation_masks 的字典。图像和分割掩码都将被调整为 512x512。然后,将生成的数据集分批处理成四对图像和分割掩码的组。

def preprocess_inputs(inputs):

def unpackage_inputs(inputs):

return {

"images": inputs["image"],

"segmentation_masks": inputs["class_segmentation"],

}

outputs = inputs.map(unpackage_inputs)

outputs = outputs.map(keras.layers.Resizing(height=512, width=512))

outputs = outputs.batch(4, drop_remainder=True)

return outputs

train_ds = preprocess_inputs(train_ds)

batch = train_ds.take(1).get_single_element()

可以使用 plot_images_masks 函数可视化此预处理的输入训练数据的一个批次。此函数接受图像、分割掩码和预测掩码的批次作为输入,并将它们显示在网格中。

def plot_images_masks(images, masks, pred_masks=None):

num_images = len(images)

plt.figure(figsize=(8, 4))

rows = 3 if pred_masks is not None else 2

for i in range(num_images):

plt.subplot(rows, num_images, i + 1)

plt.imshow(images[i] / 255)

plt.axis("off")

plt.subplot(rows, num_images, num_images + i + 1)

plt.imshow(masks[i])

plt.axis("off")

if pred_masks is not None:

plt.subplot(rows, num_images, i + 1 + 2 * num_images)

plt.imshow(pred_masks[i])

plt.axis("off")

plt.show()

plot_images_masks(batch["images"], batch["segmentation_masks"])

预处理应用于评估数据集 eval_ds。

eval_ds = preprocess_inputs(eval_ds)

数据增强

Keras 提供了各种图像增强选项。在此示例中,我们将使用 RandomFlip 增强来增强训练数据集。RandomFlip 增强会随机水平或垂直翻转训练数据集中的图像。这有助于提高模型对图像中对象方向变化的鲁棒性。

train_ds = train_ds.map(keras.layers.RandomFlip())

batch = train_ds.take(1).get_single_element()

plot_images_masks(batch["images"], batch["segmentation_masks"])

模型配置

请随意修改模型训练的配置,并注意训练结果如何变化。这是一个很好的练习,可以更好地理解训练流程。

学习率调度器用于优化器为每个 epoch 计算学习率。然后,优化器使用学习率来更新模型的权重。在此情况下,学习率调度器使用余弦衰减函数。余弦衰减函数开始时较高,然后随时间减小,最终达到零。VOC 数据集的基数是 2124,批次大小为 4。数据集基数对于学习率衰减很重要,因为它决定了模型将训练多少步。初始学习率与 0.007 成正比,衰减步数为 2124。这意味着学习率将从 INITIAL_LR 开始,然后在 2124 步内减小到零。

BATCH_SIZE = 4

INITIAL_LR = 0.007 * BATCH_SIZE / 16

EPOCHS = 1

NUM_CLASSES = 21

learning_rate = keras.optimizers.schedules.CosineDecay(

INITIAL_LR,

decay_steps=EPOCHS * 2124,

)

我们将 resnet_50_imagenet 预训练权重作为模型的图像编码器,此实现既可用于 DeepLabV3,也可用于带有附加解码器块的 DeepLabV3+。对于 DeepLabV3+,我们通过提供 low_level_feature_key 作为 P2(一个用于从 resnet_50_imagenet 提取特征的金字塔级别输出,它充当解码器块)来实例化 DeepLabV3Backbone 模型。要将此模型用作 DeepLabV3 架构,请忽略 low_level_feature_key,它默认为 None。

然后我们创建 DeepLabV3ImageSegmenter 实例。num_classes 参数指定模型将被训练来分割的类别数量。preprocessor 参数用于对图像输入和掩码应用预处理。

image_encoder = keras_hub.models.Backbone.from_preset("resnet_50_imagenet")

deeplab_backbone = keras_hub.models.DeepLabV3Backbone(

image_encoder=image_encoder,

low_level_feature_key="P2",

spatial_pyramid_pooling_key="P5",

dilation_rates=[6, 12, 18],

upsampling_size=8,

)

model = keras_hub.models.DeepLabV3ImageSegmenter(

backbone=deeplab_backbone,

num_classes=21,

activation="softmax",

preprocessor=preprocessor,

)

编译模型

model.compile() 函数设置模型的训练过程。它定义了 - 优化算法 - 随机梯度下降 (SGD) - 损失函数 - 分类交叉熵 - 评估指标 - 平均 IoU 和分类准确率

语义分割评估指标

平均交并比 (MeanIoU):MeanIoU 衡量语义分割模型在图像中准确识别和描绘不同对象或区域的程度。它计算预测对象边界和实际对象边界之间的重叠,得分在 0 到 1 之间,其中 1 表示完美匹配。

分类准确率:分类准确率衡量图像中正确分类的像素的比例。它给出一个简单的百分比,指示模型预测整个图像中像素类别的准确程度。

本质上,MeanIoU 强调识别特定对象边界的准确性,而分类准确率则对整体像素级正确性进行广泛概述。

model.compile(

optimizer=keras.optimizers.SGD(

learning_rate=learning_rate, weight_decay=0.0001, momentum=0.9, clipnorm=10.0

),

loss=keras.losses.CategoricalCrossentropy(from_logits=False),

metrics=[

keras.metrics.MeanIoU(

num_classes=NUM_CLASSES, sparse_y_true=False, sparse_y_pred=False

),

keras.metrics.CategoricalAccuracy(),

],

)

model.summary()

Preprocessor: "deep_lab_v3_image_segmenter_preprocessor"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓ ┃ Layer (type) ┃ Config ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┩ │ deep_lab_v3_image_converter (DeepLabV3ImageConverter) │ Image size: (512, 512) │ └───────────────────────────────────────────────────────────────┴──────────────────────────────────────────┘

Model: "deep_lab_v3_image_segmenter"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━┓ ┃ Layer (type) ┃ Output Shape ┃ Param # ┃ ┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━┩ │ inputs (InputLayer) │ (None, None, None, 3) │ 0 │ ├───────────────────────────────────────────────┼────────────────────────────────────┼─────────────────────┤ │ deep_lab_v3_backbone (DeepLabV3Backbone) │ (None, None, None, 256) │ 39,190,656 │ ├───────────────────────────────────────────────┼────────────────────────────────────┼─────────────────────┤ │ segmentation_output (Conv2D) │ (None, None, None, 21) │ 5,376 │ └───────────────────────────────────────────────┴────────────────────────────────────┴─────────────────────┘

Total params: 39,196,032 (149.52 MB)

Trainable params: 39,139,232 (149.30 MB)

Non-trainable params: 56,800 (221.88 KB)

dict_to_tuple 实用函数有效地将训练和验证数据集的字典转换为图像和独热编码的分割掩码的元组,这在 DeepLabv3+ 模型的训练和评估期间使用。

def dict_to_tuple(x):

return x["images"], tf.one_hot(

tf.cast(tf.squeeze(x["segmentation_masks"], axis=-1), "int32"), 21

)

train_ds = train_ds.map(dict_to_tuple)

eval_ds = eval_ds.map(dict_to_tuple)

model.fit(train_ds, validation_data=eval_ds, epochs=EPOCHS)

1/Unknown 40s 40s/step - categorical_accuracy: 0.1191 - loss: 3.0568 - mean_io_u: 0.0118

2124/2124 ━━━━━━━━━━━━━━━━━━━━ 281s 114ms/步 - categorical_accuracy: 0.7286 - loss: 1.0707 - mean_io_u: 0.0926 - val_categorical_accuracy: 0.8199 - val_loss: 0.5900 - val_mean_io_u: 0.3265

<keras.src.callbacks.history.History at 0x7fd7a897f8d0>

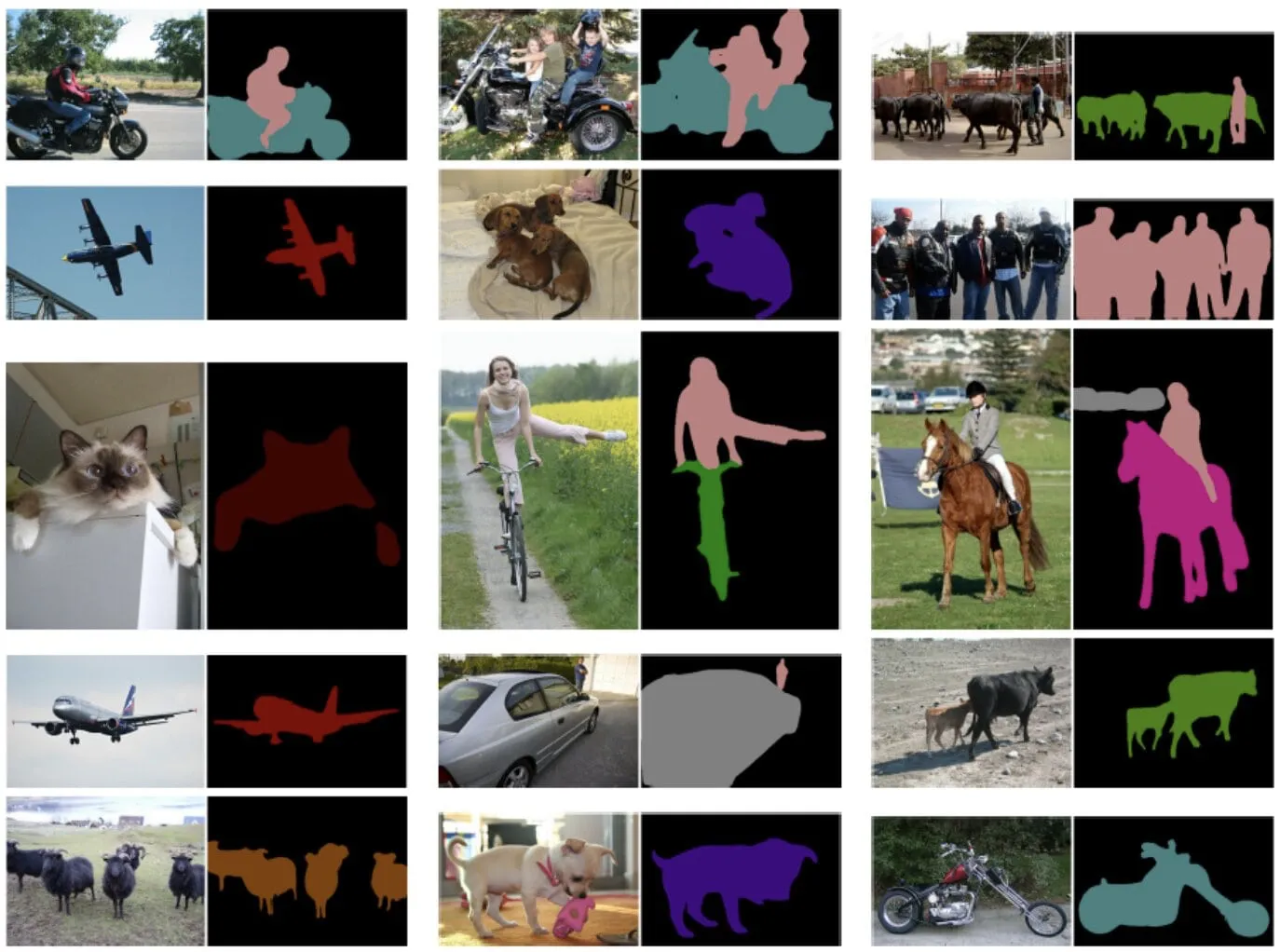

使用训练好的模型进行预测

DeepLabv3+ 的模型训练现已完成,让我们通过对一些样本图像进行预测来测试它。注意:为演示起见,模型仅训练了 1 个 epoch,为了获得更好的准确性和结果,请训练更多 epoch。

test_ds = load(split="sbd_eval")

test_ds = preprocess_inputs(test_ds)

images, masks = next(iter(test_ds.take(1)))

images = ops.convert_to_tensor(images)

masks = ops.convert_to_tensor(masks)

preds = ops.expand_dims(ops.argmax(model.predict(images), axis=-1), axis=-1)

masks = ops.expand_dims(ops.argmax(masks, axis=-1), axis=-1)

plot_images_masks(images, masks, preds)

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 3s/步

1/1 ━━━━━━━━━━━━━━━━━━━━ 3s 3s/步

以下是使用 KerasHub DeepLabv3 模型的一些额外技巧

- 该模型可以在各种数据集上进行训练,包括 COCO 数据集、PASCAL VOC 数据集和 Cityscapes 数据集。

- 该模型可以在自定义数据集上进行微调,以提高其在特定任务上的性能。

- 该模型可用于对图像执行实时推理。

- 此外,还可以查看 KerasHub 的其他分割模型。